Trivedi and Cetin hope to speed AI, machine learning with new chip design

Artificial intelligence and machine learning models used to pilot a drone or assist with robotic surgery require calculations that must be made in real time. These applications demand high accuracy, as mispredictions can lead to catastrophic consequences. To meet this demand, Assistant Professor Amit Ranjan Trivedi and Research Professor Ahmet Enis Cetin are part of a team designing computer chips that will allow for faster, more precise processing of machine learning and artificial intelligence applications. They plan to accomplish this by using a compute-in-memory approach, where processor and storage elements are intertwined.

Traditionally, computers perform calculations in a processor that is separate from the elements that store the data. Your laptop, for example, keeps information on its hard drive (think of this as the computer's long-term storage) but uses Random Access Memory, or RAM, as short-term storage. RAM holds the data for whatever you are using or working on at the moment. When you want to play a game or watch a movie you recently downloaded, for instance, the computer loads it from the hard drive into RAM, and the processor then pulls the data it needs from RAM to compute on it.

Trivedi and Cetin propose computing and storing the data in the same place, eliminating the need for a separate processor. This would save costs and, more importantly, cut processing time by eliminating excessive data exchanges between memory and processor.
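To make the data-movement argument concrete, here is a toy sketch in Python. It computes a matrix-vector product, a core operation in neural-network layers, in two ways. The first is conventional: every weight is fetched from memory into the processor. The second is a simplified compute-in-memory model, where each column of the memory array accumulates its own result and only inputs and outputs cross the memory boundary. The function names and the "words moved" count are hypothetical illustrations, not a model of the team's chip. In real in-memory hardware the accumulation is done by circuitry inside the array; here it is simply computed in Python.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # weights[r][c]: row r, column c of the stored array


def conventional_mvm(weights: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Processor-centric matrix-vector product (hypothetical toy model).

    Every weight is fetched from memory into the processor before use.
    Returns the result and the number of words moved across the memory boundary.
    """
    rows, cols = len(weights), len(weights[0])
    moved = rows                      # input vector copied into the processor
    y = [0.0] * cols
    for r in range(rows):
        for c in range(cols):
            w = weights[r][c]         # one weight fetched from memory
            moved += 1
            y[c] += w * x[r]          # multiply-accumulate in the processor
    moved += cols                     # results written back to memory
    return y, moved


def in_memory_mvm(weights: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Simplified compute-in-memory model (hypothetical toy model).

    The inputs are applied to the array rows, and each column accumulates its
    own dot product where the weights are stored, so the weights never move.
    """
    rows, cols = len(weights), len(weights[0])
    y = [sum((weights[r][c] * x[r] for r in range(rows)), 0.0) for c in range(cols)]
    moved = rows + cols               # only inputs in and results out
    return y, moved


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    x = [1.0, 1.0, 1.0]
    print(conventional_mvm(W, x))
    print(in_memory_mvm(W, x))
```

For the 3×2 example both functions return the same values (9 and 12 for an all-ones input), but the conventional path moves 11 words while the in-memory path moves 5. For a 1,000 × 1,000 layer the gap grows to 1,002,000 words versus 2,000, because in the in-memory model the weights never leave the array.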

“AI doesn’t move fast. There are millions to billions of parameters for a complex model, and downloading that from RAM to the processor kills the whole performance. We are designing a new memory that can not only store the data but locally compute it,” Trivedi said.
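Trivedi's point about parameter counts can be checked with back-of-the-envelope arithmetic. The sketch below estimates how long it takes just to stream a model's parameters once from RAM to the processor. The function name is hypothetical. The 1-byte (8-bit) parameter size and 25 GB/s memory bandwidth in the example are illustrative assumptions, not figures from the project. The estimate also ignores caching and any overlap between data transfer and computation.

```python
def transfer_time_ms(num_params: int, bytes_per_param: float, bandwidth_gb_per_s: float) -> float:
    """Milliseconds needed to move every parameter once from RAM to the processor.

    Assumes a constant sustained bandwidth and no on-chip caching (illustrative only).
    """
    total_bytes = num_params * bytes_per_param
    seconds = total_bytes / (bandwidth_gb_per_s * 1e9)  # 1 GB taken as 1e9 bytes
    return seconds * 1e3


if __name__ == "__main__":
    # Hypothetical example values: 1-byte parameters, 25 GB/s memory bandwidth.
    for params in (1_000_000, 1_000_000_000):
        ms = transfer_time_ms(params, bytes_per_param=1, bandwidth_gb_per_s=25)
        print(f"{params:>13,} parameters: {ms:.2f} ms per pass")
```

Under these assumptions a million parameters take about 0.04 ms to move, but a billion take about 40 ms before any arithmetic is done. That matters for the real-time applications described above if the weights must be fetched again on every pass.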

Trivedi and Cetin’s compute-in-memory approach represents a step toward brain-inspired computing platforms. A biological brain doesn’t compartmentalize itself into central processing units or RAM, but coalesces all storage and computing operations in the same structure.

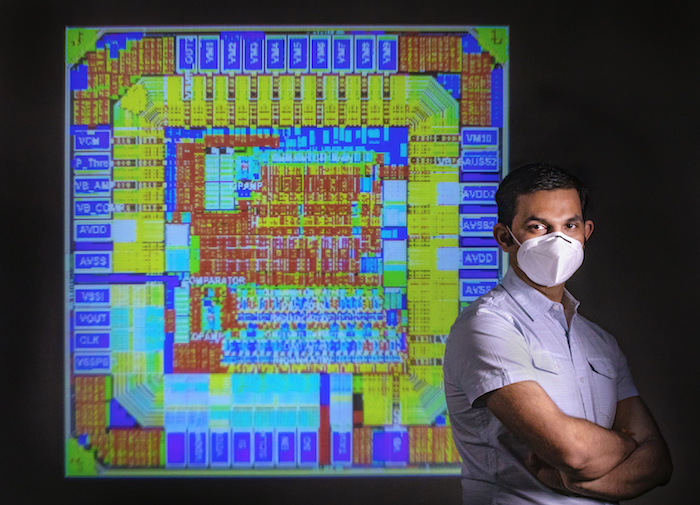

This semester, they are designing the chip and building a proof-of-concept demonstration to show that storage and computation can both be done in a single unit.

“I think this could be particularly useful for smart home applications, temperature sensors, and other ultra-low-power sensors where a lot of intelligence processing can be done locally,” Trivedi said.

The project, Scalable Multibit Precision In-SRAM Deep Neural Network Processing by Co-designing Network Operators with In-Memory Computing Constraints, is funded by Intel Labs and runs through June 2023. Cetin and Trivedi are principal investigators on the grant and are joined by PhD students Shamma Nasrin and Diaa Badawi, a TRIPODS Graduate Fellow. During Fall 2020, Nasrin was an intern at GlobalFoundries, a leading semiconductor manufacturer. Under Trivedi's supervision, Nasrin has also won numerous awards, including the Grace Hopper Scholar Award in 2019, the Computing Research Association's graduate award, and the Richard Newton Young Student Fellow Award from the Design Automation Conference (DAC). The team is also advised by two mentors from Intel: Wilfred Gomes, an Intel Fellow with Microprocessor Design and Technologies, and Amir Khosrowshahi, a vice president at Intel.