Easing the microchip shortage with a new chip design method via AI

The current microchip shortage has vexed technology and manufacturing companies for over two years.

Chip production was severely affected by COVID-19 as fabrication facilities closed, and experts predict it could take another year or two for output to recover.

Meanwhile, demand for increasingly complex chips has accelerated as smart features are built into a growing number of products, including washing machines and refrigerators.

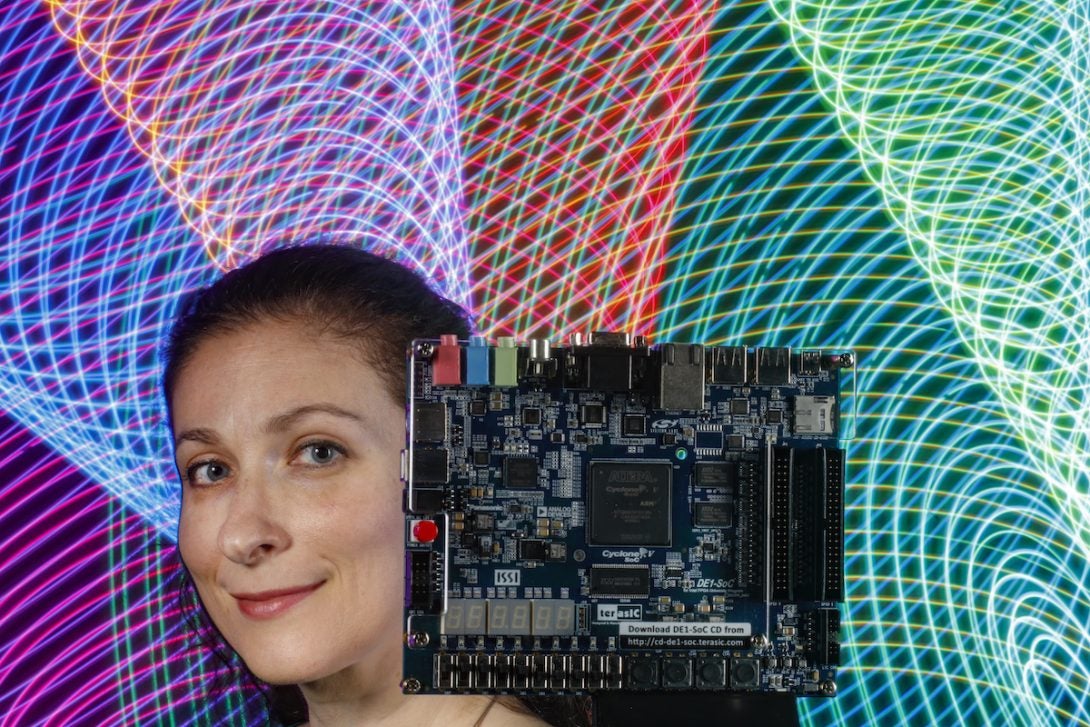

Assistant Professor Inna Partin-Vaisband aims to drastically shorten the design-to-market time for microchips by using artificial intelligence to remove a primary bottleneck in the design process. Her work is funded by a three-year, $500,000 grant from the National Science Foundation, “End-to-End Global Routing with Reinforcement Learning in VLSI Systems.”

“We have reached stagnation in recent years; there is an increasing gap between the complexity of chips and the time needed to design and bring them to market,” Partin-Vaisband said. “We can’t increase the number of engineers working on this 100-fold. We need a different, more efficient paradigm.”

Manufacturing a chip takes more than three months in dust-free clean rooms, using multi-million-dollar machines inside semiconductor fabrication facilities that often cost billions of dollars to build. To boost performance, manufacturers are packing ever more transistors onto a single chip: billions, or even trillions. Compare that with Intel’s first microprocessor, the 4004, released in 1971, which had 2,300 transistors.

In the past 15 years or so, chip design complexity has grown faster than the capabilities of electronic design automation (EDA) tools, opening an exponentially widening gap between the two. Simulations must be run to confirm that these circuits work together as designed, in a process known as convergence. If the design does not converge, engineers must go back several steps and fix the problems manually. Because the quality of the result cannot be known or predicted in advance, the outcome is lower-performance chips, and lost profit if the chips are not ready for market on time.

Partin-Vaisband is taking a fundamentally new approach to circuit global routing: rather than following the standard design method, she redefines routing as an imaging problem. She does this by applying deep neural nets, multi-layer computer models that learn from training data and improve over time, loosely analogous to the way the human brain forms connections between neurons. A commonly cited example of a deep neural net at work is Google’s search algorithm, which can complete a search term before you have finished typing it.
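To make “routing as an imaging problem” more concrete, here is a minimal Python sketch built on a common framing from the routing literature, not on the project’s actual model. The chip is divided into a coarse grid of routing cells, and each net’s estimated wire usage is painted onto that grid, producing a 2D array that can be treated like a grayscale image. The function names `demand_image` and `overflow_image`, the half-perimeter demand heuristic, and the uniform per-cell capacity are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

# A pin location on a coarse global-routing grid, given as (row, col) of a grid cell.
Pin = Tuple[int, int]
Grid = List[List[float]]


def demand_image(nets: List[List[Pin]], rows: int, cols: int) -> Grid:
    """Rasterize nets into a rows x cols 'image' of estimated routing demand.

    Hypothetical heuristic (not the project's model): a shortest two-pin route
    across a net's bounding box visits dr + dc + 1 cells (half-perimeter
    estimate), and that wire usage is spread evenly over every cell of the box.
    """
    img = [[0.0] * cols for _ in range(rows)]
    for pins in nets:
        r0, r1 = min(r for r, _ in pins), max(r for r, _ in pins)
        c0, c1 = min(c for _, c in pins), max(c for _, c in pins)
        cells_used = (r1 - r0) + (c1 - c0) + 1
        box_area = (r1 - r0 + 1) * (c1 - c0 + 1)
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                img[r][c] += cells_used / box_area
    return img


def overflow_image(demand: Grid, capacity: float) -> Grid:
    """Per-cell demand in excess of a uniform routing capacity (0.0 where it fits)."""
    return [[max(0.0, d - capacity) for d in row] for row in demand]


if __name__ == "__main__":
    # Three hypothetical nets on a 4 x 4 grid.
    nets = [[(0, 0), (0, 3)], [(0, 0), (3, 3)], [(1, 1), (1, 2)]]
    demand = demand_image(nets, rows=4, cols=4)
    overflow = overflow_image(demand, capacity=1.0)
    for row in overflow:
        print(" ".join(f"{v:.2f}" for v in row))
    print("total overflow:", sum(map(sum, overflow)))
```

Cells with nonzero overflow mark likely congestion hotspots. Because the result is simply a 2D array of numbers, image-oriented models such as convolutional neural networks can in principle take it as input. Whether the project encodes routing this way is an assumption of this sketch.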

Currently, chip design is carried out on CPUs, running through the design steps sequentially, with engineers manually fixing problems as they encounter them. Instead, Partin-Vaisband will develop a deep learning framework to accelerate chip design. Just as deep neural nets have been trained to upscale images to a higher resolution and fill in missing details, she intends to train a model to evaluate thousands or even millions of candidate circuit design solutions at a time, orders of magnitude faster than the current sequential process.

She has proof-of-concept results on small parts of the chip design process and hopes to extend the approach to higher levels of the design flow, which could yield a two-to-three-orders-of-magnitude (100- to 1,000-fold) improvement in design efficiency.

“This project can shift physical design paradigms toward a learning-driven, predictable process,” Partin-Vaisband said.